Immersion cooling represents a first-of-its-kind decision for many organisations. Unlike air cooling, which benefits from decades of institutional experience, immersion cooling is still maturing as a category. This means the selection and procurement process looks different, requiring deeper technical engagement and the early involvement of specialists to align stakeholders across facilities, IT, and sustainability. However, once operational, these datacentres follow familiar patterns—the novelty is concentrated at the decision stage, not in day-to-day management.

The Shift in Operational Monitoring

The foundational disciplines of datacentre operations (availability, redundancy, and oversight) remain intact. What changes is the interface. Instead of monitoring environmental proxies like humidity and airflow distribution, operators focus on direct thermal KPIs such as fluid temperature and flow rate. This shift from "interpreting signals" to "direct control" tends to produce dashboards that are more stable and predictable, because the fluid's thermal behaviour varies less than room airflow does.
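As a rough illustration of what monitoring direct thermal KPIs can look like, the sketch below checks a single tank reading against fixed limits. It is a minimal example under stated assumptions: the `TankReading` fields, the `check_reading` helper, and all three limit values are hypothetical and chosen only for illustration. Real limits come from the tank vendor and the IT equipment specifications.

```python
from dataclasses import dataclass
from typing import List

# Hypothetical limits for illustration only. Actual values depend on the
# fluid, the tank design, and the IT equipment being cooled.
MAX_RETURN_C = 50.0    # highest acceptable fluid return temperature
MAX_DELTA_T_C = 15.0   # highest acceptable supply-to-return temperature rise
MIN_FLOW_LPM = 20.0    # lowest acceptable flow rate, litres per minute


@dataclass(frozen=True)
class TankReading:
    """One snapshot of the thermal KPIs for a single tank (assumed schema)."""
    tank_id: str
    fluid_supply_c: float  # fluid temperature entering the tank
    fluid_return_c: float  # fluid temperature leaving the tank
    flow_lpm: float        # fluid flow rate in litres per minute


def check_reading(reading: TankReading) -> List[str]:
    """Return a list of alert messages; an empty list means all KPIs are in range."""
    alerts: List[str] = []
    delta_t = reading.fluid_return_c - reading.fluid_supply_c

    if reading.fluid_return_c > MAX_RETURN_C:
        alerts.append(
            f"{reading.tank_id}: return temperature "
            f"{reading.fluid_return_c:.1f} C is above {MAX_RETURN_C:.1f} C"
        )
    if delta_t > MAX_DELTA_T_C:
        alerts.append(
            f"{reading.tank_id}: temperature rise {delta_t:.1f} K "
            f"is above {MAX_DELTA_T_C:.1f} K"
        )
    if reading.flow_lpm < MIN_FLOW_LPM:
        alerts.append(
            f"{reading.tank_id}: flow {reading.flow_lpm:.1f} L/min "
            f"is below {MIN_FLOW_LPM:.1f} L/min"
        )
    return alerts


if __name__ == "__main__":
    healthy = TankReading("tank-01", 35.0, 45.0, 30.0)
    degraded = TankReading("tank-02", 40.0, 58.0, 10.0)
    for reading in (healthy, degraded):
        for message in check_reading(reading) or [f"{reading.tank_id}: OK"]:
            print(message)
```

The point of the sketch is that each check reads a quantity measured directly in the fluid loop, rather than inferring thermal state from room-level proxies.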

Strategic Operational Changes

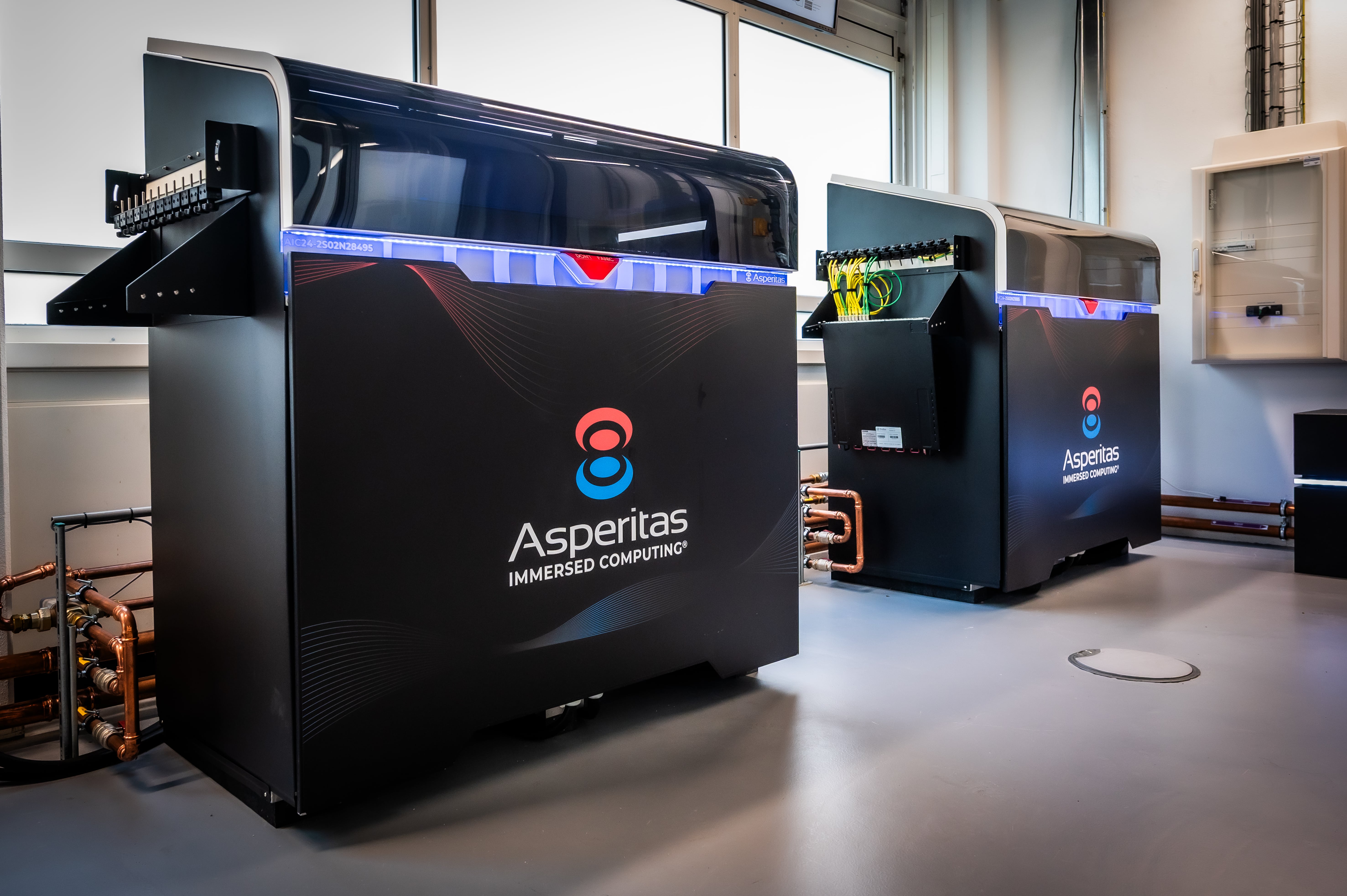

The transition to immersion cooling introduces specific logistical workflows, particularly regarding dielectric fluids and the physical servicing of IT equipment.

Fluid Logistics and Commissioning

During the installation phase, the primary change is the introduction of fluid delivery, storage, and filling procedures. Once the system is filled, the dielectric fluid is largely a "set and forget" component. Initial commissioning also brings the system into a "known-good" state that is far more predictable than balancing air pressures in a traditional room.

Component Maintenance and Servicing

In an immersion environment, servers are lifted and drained rather than slid horizontally from racks. This creates a calmer, more deliberate servicing environment for technicians and eliminates the noise and high-velocity air movement typical of traditional data halls. While this requires specific tools like hoists or service trolleys, it significantly improves working conditions.

Alignment with Established Frameworks

Because immersion cooling is a maturing category, alignment with industry frameworks such as those published by OCP and ASHRAE is critical for long-term reliability and multi-vendor interoperability.

Managing the Human and Organisational Transition

The biggest hurdle is often cultural rather than technical. Operating an immersion-cooled facility requires early alignment between the Facilities and IT teams.

- Stakeholder Engagement: Early involvement of facility managers is essential to define the heat rejection strategy, especially for waste heat reuse.

- Training and Safety: While the dielectric fluids used are typically rated non-toxic, teams should still follow the fluid's safety data sheet and must be trained on spill prevention and the specific protocols required when opening a tank.

- Predictability as a Goal: For operators accustomed to airflow variability, the transition to stable, deterministic dashboards is the ultimate sign of operational success.
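When Facilities and IT teams discuss the heat rejection strategy mentioned above, a first-order estimate of the heat carried away by the fluid loop is a useful shared starting point. The sketch below applies the standard sensible-heat relation (heat rate = mass flow × specific heat × temperature rise). The function name and the example fluid properties are hypothetical placeholders, not values for any particular product; real figures should come from the fluid's data sheet.

```python
def heat_rejected_kw(
    flow_lpm: float,
    delta_t_k: float,
    density_kg_m3: float,
    specific_heat_kj_per_kg_k: float,
) -> float:
    """Estimate the heat carried by the fluid loop, in kilowatts.

    Uses Q = m_dot * c_p * delta_T, where m_dot is the mass flow rate.
    """
    # Convert litres per minute to cubic metres per second.
    volume_flow_m3_s = flow_lpm / 1000.0 / 60.0
    # Mass flow in kilograms per second.
    mass_flow_kg_s = volume_flow_m3_s * density_kg_m3
    # kJ/(kg*K) * kg/s * K = kJ/s = kW
    return mass_flow_kg_s * specific_heat_kj_per_kg_k * delta_t_k


if __name__ == "__main__":
    # Placeholder inputs for illustration only (assumed, not measured).
    estimate = heat_rejected_kw(
        flow_lpm=60.0,
        delta_t_k=10.0,
        density_kg_m3=800.0,
        specific_heat_kj_per_kg_k=2.0,
    )
    print(f"Estimated heat rejected: {estimate:.1f} kW")
```

An estimate like this ignores pump heat and losses in the loop, so it is best treated as a conversation starter for sizing heat reuse rather than a design figure.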

Conclusion

Immersion cooling changes the beginning of the datacentre lifecycle more than the end. Selection requires deeper technical diligence and installation introduces fluid logistics, but once commissioned, the systems behave in deterministic ways. By following established frameworks like OCP and ASHRAE, operators can deploy immersion cooling with the same confidence as traditional high-availability infrastructure.